The AI accelerator vs GPU debate has become increasingly important as artificial intelligence continues to reshape industries. From machine learning training to real-time inference, hardware selection plays a critical role in performance, efficiency, and scalability. Understanding the difference between an AI accelerator and a GPU is essential for businesses, developers, and researchers working with modern AI systems.

In this in-depth guide, we compare AI accelerators and GPUs: their architectures, performance characteristics, and use cases, and how to decide which option best suits your AI workloads.

Understanding AI Accelerator vs GPU

To fully grasp the AI accelerator vs GPU debate, it is important to understand what each technology is designed to do.

What Is an AI Accelerator?

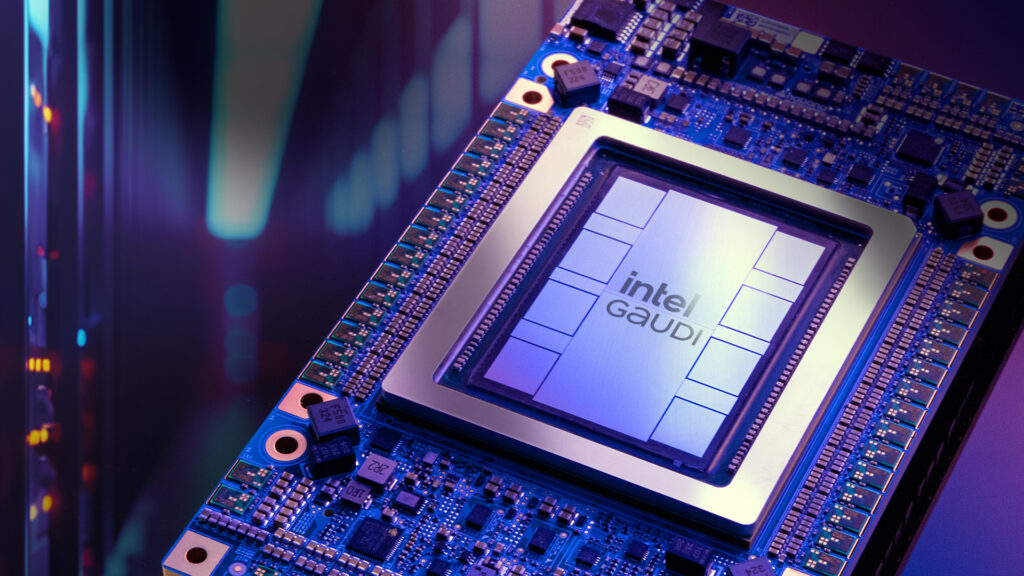

An AI accelerator is hardware designed specifically to speed up artificial intelligence workloads such as neural network training and inference. Unlike general-purpose processors, AI accelerators are optimized for tensor operations, especially matrix multiplication, and for massively parallel computation.

Common examples of AI accelerators include TPUs (Tensor Processing Units), NPUs (Neural Processing Units), other ASICs built exclusively for AI tasks, and FPGAs configured for AI workloads. (TPUs and most NPUs are themselves ASICs.)

What Is a GPU?

A GPU (Graphics Processing Unit) was originally designed for rendering graphics but has evolved into a powerful parallel processor. GPUs are widely used in AI due to their ability to process thousands of operations simultaneously, making them suitable for training deep learning models.

In short, GPUs are more versatile, while AI accelerators are more specialized.

AI Accelerator vs GPU Architecture Differences

The architectural design is one of the most significant distinctions in the AI accelerator vs GPU comparison.

GPU Architecture Explained

GPUs consist of thousands of small cores optimized for parallel processing. This architecture makes GPUs excellent for handling diverse workloads such as graphics rendering, gaming, scientific computing, and AI training.

Key architectural features:

SIMD-style execution (Single Instruction, Multiple Data; NVIDIA calls its variant SIMT, Single Instruction, Multiple Threads)

High memory bandwidth

General-purpose programmability
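To make the SIMD idea concrete, here is a toy Python model, not real GPU code: one "instruction" is applied to a whole group of lanes at a time, so the instruction count grows with the number of groups rather than the number of elements. The function name `simd_apply` is hypothetical, and the default lane width of 32 is an assumption chosen to mirror the 32-thread warp on NVIDIA GPUs.

```python
from typing import Callable, List, Tuple


def simd_apply(op: Callable[[float, float], float],
               a: List[float],
               b: List[float],
               width: int = 32) -> Tuple[List[float], int]:
    """Toy SIMD model (hypothetical helper, not a real GPU API).

    Applies `op` element-wise to `a` and `b`, processing `width` lanes per
    "instruction". Returns the results and the number of instructions issued.
    """
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    if width < 1:
        raise ValueError("width must be positive")
    out: List[float] = []
    instructions = 0
    for start in range(0, len(a), width):
        lanes_a = a[start:start + width]
        lanes_b = b[start:start + width]
        # Every lane in this group runs the same operation on its own data.
        out.extend(op(x, y) for x, y in zip(lanes_a, lanes_b))
        instructions += 1
    return out, instructions


if __name__ == "__main__":
    result, issued = simd_apply(lambda x, y: x + y,
                                [1, 2, 3, 4, 5, 6, 7, 8], [10] * 8, width=4)
    print(result, issued)
```

With `width=4`, adding two 8-element lists takes 2 "instructions" instead of 8; wider lanes amortize instruction overhead further, which is the core reason GPUs handle data-parallel work so well.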

AI Accelerator Architecture Explained

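AI accelerators are built with AI-specific operations in mind. Their architecture often includes dedicated matrix-multiply units, systolic arrays, or custom pipelines that maximize efficiency for neural network computations. (Modern data-center GPUs also include matrix units, such as NVIDIA's Tensor Cores, so the architectural line between the two is blurring.)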

Key architectural features:

Fixed or semi-fixed function units

Optimized tensor processing

Lower overhead for AI inference
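Systolic arrays are easiest to understand by watching data move. The sketch below is a cycle-by-cycle Python simulation of one common variant, an output-stationary systolic array: each processing element (PE) keeps its own running sum, values of `A` flow left to right, values of `B` flow top to bottom, and each input row or column is delayed (skewed) by one cycle per position so that matching operands meet in the right PE. Real accelerators also use other dataflows (for example, weight-stationary designs keep weights fixed in each cell), so treat the hypothetical `systolic_matmul` function as a teaching model rather than a description of any particular chip.

```python
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def systolic_matmul(A: Matrix, B: Matrix) -> Tuple[Matrix, int]:
    """Simulate an output-stationary M x N systolic array computing A @ B.

    Hypothetical teaching model. Returns the product and the number of
    clock cycles the array needs (M + N + K - 2).
    """
    M, K = len(A), len(A[0])
    if len(B) != K:
        raise ValueError("inner dimensions must match")
    N = len(B[0])

    acc = [[0.0] * N for _ in range(M)]  # per-PE accumulator, never moves
    a_reg: List[List[Optional[float]]] = [[None] * N for _ in range(M)]  # flows right
    b_reg: List[List[Optional[float]]] = [[None] * N for _ in range(M)]  # flows down

    cycles = M + N + K - 2
    for t in range(cycles):
        new_a: List[List[Optional[float]]] = [[None] * N for _ in range(M)]
        new_b: List[List[Optional[float]]] = [[None] * N for _ in range(M)]
        for i in range(M):
            for j in range(N):
                # Left edge: row i of A enters skewed by i cycles; inner PEs take the left neighbour's value.
                if j == 0:
                    k = t - i
                    new_a[i][j] = A[i][k] if 0 <= k < K else None
                else:
                    new_a[i][j] = a_reg[i][j - 1]
                # Top edge: column j of B enters skewed by j cycles; inner PEs take the upper neighbour's value.
                if i == 0:
                    k = t - j
                    new_b[i][j] = B[k][j] if 0 <= k < K else None
                else:
                    new_b[i][j] = b_reg[i - 1][j]
        a_reg, b_reg = new_a, new_b
        # Every PE performs one multiply-accumulate per cycle when both operands are present.
        for i in range(M):
            for j in range(N):
                if a_reg[i][j] is not None and b_reg[i][j] is not None:
                    acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc, cycles
```

For 2×2 inputs the simulated array finishes in 4 cycles. The payoff is that, once the pipeline is full, an N×N array performs up to N² multiply-accumulates per cycle while each operand is fetched from memory only once, which is where much of the efficiency described above comes from.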

In the AI accelerator vs GPU discussion, architecture directly influences efficiency and performance.

AI Accelerator vs GPU Performance Comparison

Performance is one of the most critical factors when weighing an AI accelerator against a GPU.

Training Performance

GPUs dominate AI model training due to their flexibility and strong software ecosystem. They support popular frameworks like TensorFlow, PyTorch, and JAX with extensive optimization.

Some AI accelerators, such as Google's TPUs, are used for large-scale training, but many accelerators target inference or are limited to specific frameworks or model architectures.

Inference Performance

AI accelerators shine in inference tasks. For inference, accelerators often offer:

Lower latency

Higher throughput

Better performance per watt

This makes AI accelerators well suited to deployment in data centers, edge devices, and embedded systems.
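Latency, throughput, and performance per watt are linked by simple arithmetic. The sketch below uses made-up numbers, not measurements of any real GPU or accelerator, to show how they are computed: throughput is batch size divided by batch latency, and performance per watt is throughput divided by power, which works out to inferences per joule. The `InferenceProfile` class and every figure in it are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class InferenceProfile:
    """Hypothetical serving profile; all numbers are illustrative."""
    name: str
    batch_size: int          # requests processed together
    batch_latency_ms: float  # time to complete one batch
    power_w: float           # average power draw while serving

    @property
    def throughput_per_s(self) -> float:
        # Requests completed per second.
        return self.batch_size / (self.batch_latency_ms / 1000.0)

    @property
    def inferences_per_joule(self) -> float:
        # (inferences / s) / (joules / s) = inferences per joule, i.e. performance per watt.
        return self.throughput_per_s / self.power_w


if __name__ == "__main__":
    gpu = InferenceProfile("hypothetical GPU", batch_size=32, batch_latency_ms=40.0, power_w=400.0)
    accel = InferenceProfile("hypothetical accelerator", batch_size=8, batch_latency_ms=10.0, power_w=75.0)
    for p in (gpu, accel):
        print(f"{p.name}: {p.batch_latency_ms} ms/batch, "
              f"{p.throughput_per_s:.0f} req/s, {p.inferences_per_joule:.2f} inferences/J")
```

With these assumed figures both devices serve 800 requests per second, but the second does so at a quarter of the per-batch latency and with roughly five times the inferences per joule (about 10.7 versus 2.0). It also shows why batching matters: a larger batch raises throughput, but each request waits longer for its batch to finish.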

AI Accelerator vs GPU Power Efficiency

Power consumption is a major consideration in modern AI infrastructure.

GPU Power Efficiency

GPUs are powerful but energy-intensive: a single high-end data-center GPU can draw several hundred watts. Training large AI models across many GPUs can therefore significantly increase electricity and cooling costs, especially at scale.

AI Accelerator Power Efficiency

AI accelerators are designed for energy efficiency. In the AI accelerator vs GPU comparison, accelerators often consume less power while delivering comparable or better inference performance.

This efficiency makes AI accelerators attractive for mobile devices, IoT systems, and cloud inference services.
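The electricity cost of a training run can be estimated with back-of-the-envelope arithmetic: energy equals device count times average power times hours, scaled by the data center's Power Usage Effectiveness (PUE) to account for cooling and other facility overhead. The function name `training_energy_cost`, the default PUE of 1.2, and every input below are hypothetical; real power draw varies with utilization and hardware.

```python
from typing import Tuple


def training_energy_cost(num_devices: int,
                         avg_power_kw: float,
                         hours: float,
                         price_per_kwh: float,
                         pue: float = 1.2) -> Tuple[float, float]:
    """Return (facility energy in kWh, electricity cost). Illustrative only."""
    # Energy drawn by the GPUs or accelerators themselves.
    it_energy_kwh = num_devices * avg_power_kw * hours
    # PUE >= 1.0 scales device energy up to total facility energy (cooling, power delivery, etc.).
    facility_energy_kwh = it_energy_kwh * pue
    return facility_energy_kwh, facility_energy_kwh * price_per_kwh


if __name__ == "__main__":
    # Hypothetical run: 64 devices at 0.7 kW average for 30 days, $0.10 per kWh.
    energy, cost = training_energy_cost(64, 0.7, 30 * 24, 0.10)
    print(f"{energy:,.1f} kWh, ${cost:,.2f}")
```

For this assumed run the estimate comes to about 38,700 kWh and roughly $3,870. Because the cost scales linearly with average power, a device that finishes the same work at lower power reduces the bill in direct proportion.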

AI Accelerator vs GPU Cost Analysis

Cost plays a crucial role when choosing between an AI accelerator and a GPU.

GPU Cost Factors

Higher upfront hardware cost

Greater power and cooling requirements

Broad usability across workloads

AI Accelerator Cost Factors

Lower operational cost for inference

Limited flexibility

Potential vendor lock-in

In large-scale deployments, the AI accelerator vs GPU decision often comes down to total cost of ownership rather than initial price.
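A simple total-cost-of-ownership model makes this trade-off concrete: upfront cost (hardware plus any one-time engineering work, such as porting models to a proprietary SDK) plus energy cost over the deployment's lifetime. The sketch below uses entirely hypothetical fleet figures, including an assumed 120,000 porting effort for the accelerator fleet, and ignores factors such as staffing, maintenance contracts, and resale value.

```python
from typing import Dict

HOURS_PER_YEAR = 8760.0


def total_cost_of_ownership(upfront_cost: float,
                            avg_power_kw: float,
                            utilization: float,
                            price_per_kwh: float,
                            years: float) -> float:
    """Hypothetical TCO model: upfront spend plus electricity over `years`."""
    energy_kwh = avg_power_kw * utilization * HOURS_PER_YEAR * years
    return upfront_cost + energy_kwh * price_per_kwh


# Hypothetical fleets. The accelerator fleet's upfront cost adds an assumed
# one-time porting effort (120,000) to its hardware price (250,000).
FLEETS: Dict[str, Dict[str, float]] = {
    "gpu_fleet": {"upfront_cost": 300_000, "avg_power_kw": 40.0},
    "accelerator_fleet": {"upfront_cost": 250_000 + 120_000, "avg_power_kw": 15.0},
}

if __name__ == "__main__":
    for years in (3, 5):
        for name, fleet in FLEETS.items():
            cost = total_cost_of_ownership(fleet["upfront_cost"], fleet["avg_power_kw"],
                                           utilization=0.8, price_per_kwh=0.12, years=years)
            print(f"{years} years, {name}: {cost:,.0f}")
```

With these assumed inputs the GPU fleet is cheaper over three years (about 400,900 versus 407,800), but the accelerator fleet pulls ahead over five years (about 433,100 versus 468,200) because its lower power draw keeps saving money every year. The planning horizon, not just the sticker price, decides the winner.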

AI Accelerator vs GPU Software Ecosystem

Software compatibility is another major differentiator in the AI accelerator vs GPU discussion.

GPU Software Support

GPUs benefit from mature ecosystems, including:

CUDA and OpenCL

Extensive AI libraries

Strong developer community

This makes GPUs easier to adopt for experimentation and research.

AI Accelerator Software Support

AI accelerators often require custom SDKs or proprietary tools. While improving, their ecosystems are still more restrictive compared to GPUs.

For developers, the AI accelerator vs GPU choice may depend on how much flexibility they need.

AI Accelerator vs GPU Use Cases

Understanding real-world use cases helps clarify the AI accelerator vs GPU debate.

When to Use a GPU

AI model training

Research and experimentation

Multi-purpose workloads

Graphics-intensive applications

When to Use an AI Accelerator

AI inference at scale

Edge computing

Low-power environments

Dedicated AI pipelines

In many enterprises, a hybrid approach that uses both AI accelerators and GPUs delivers the best results.

AI Accelerator vs GPU in Data Centers

Modern data centers increasingly rely on both GPUs and AI accelerators.

GPUs handle training and complex workloads, while AI accelerators optimize inference and reduce energy consumption. This complementary approach highlights that AI accelerator vs GPU is not always an either-or decision.

AI Accelerator vs GPU for Edge Computing

Edge computing emphasizes low latency and power efficiency. Here AI accelerators, such as the NPUs built into many mobile and embedded chips, are generally better suited than GPUs because they deliver useful inference performance within tight power, thermal, and size budgets.

AI Accelerator vs GPU Future Trends

AI hardware continues to evolve rapidly.

Emerging trends include:

Hybrid AI chips

More programmable AI accelerators

Increased integration of AI accelerators into CPUs

Rather than replacing GPUs, AI accelerators will likely coexist, further shaping the AI accelerator vs GPU landscape.

AI Accelerator vs GPU: Which Should You Choose?

Choosing between an AI accelerator and a GPU depends on your specific needs:

Choose a GPU if you need flexibility, training capability, and strong software support.

Choose an AI accelerator if you prioritize inference efficiency, scalability, and lower power consumption.

Understanding your workload is key to making the right AI accelerator vs GPU decision.
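The guidance above can be written down as a small rule-of-thumb function. This is a hypothetical heuristic (the `Workload` fields and `suggest_hardware` name are illustrative) that simply encodes this article's recommendations, including the hybrid approach for mixed needs; it is not a substitute for benchmarking your own models.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of what a deployment needs."""
    trains_models: bool       # training or fine-tuning on this hardware
    needs_flexibility: bool   # research, many frameworks, non-AI workloads
    inference_at_scale: bool  # high-volume serving
    power_constrained: bool   # edge, mobile, or tight energy budget


def suggest_hardware(w: Workload) -> str:
    """Return 'gpu', 'accelerator', or 'hybrid' based on simple rules of thumb."""
    wants_gpu = w.trains_models or w.needs_flexibility
    wants_accelerator = w.inference_at_scale or w.power_constrained
    if wants_gpu and wants_accelerator:
        return "hybrid"  # e.g. train on GPUs, serve on accelerators
    if wants_accelerator:
        return "accelerator"
    return "gpu"  # default to the more general-purpose option


if __name__ == "__main__":
    print(suggest_hardware(Workload(trains_models=True, needs_flexibility=False,
                                    inference_at_scale=True, power_constrained=False)))
```

Defaulting to a GPU when no strong signal is present reflects the article's point that GPUs are the more versatile choice.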

FAQs About AI Accelerator vs GPU

What is the main difference between an AI accelerator and a GPU?

The main difference is specialization. GPUs are general-purpose parallel processors, while AI accelerators are designed specifically for AI tasks.

Is an AI accelerator better than a GPU?

It depends on the workload. AI accelerators are generally better for inference and power efficiency, while GPUs excel in training and versatility.

Can AI accelerators replace GPUs?

AI accelerators are unlikely to fully replace GPUs. Instead, the two technologies will continue to complement each other.

Which is more cost-effective: an AI accelerator or a GPU?

AI accelerators are often more cost-effective for inference at scale, while GPUs provide better value for mixed workloads.

Are GPUs still relevant for AI?

Yes, GPUs remain essential for AI training and development despite the rise of AI accelerators.

Conclusion

The AI accelerator vs GPU debate reflects the rapid evolution of artificial intelligence hardware. Both technologies offer unique advantages, and the best choice depends on performance requirements, cost considerations, and deployment scenarios. By understanding the strengths and limitations of AI accelerators and GPUs, organizations can build smarter, more efficient AI systems.